Diffusion Models for Clearer Skies

Advancing Background Removal in Space Surveillance

Tracking satellites and space debris is becoming increasingly important as Earth’s orbital environment grows more congested. Yet, detecting small and faint objects against the backdrop of stars, atmospheric effects, and instrument noise is a difficult challenge. Traditional approaches to image processing struggle when signals are weak, or when conditions such as twilight or partial cloud cover obscure observations. To push the limits of what can be detected, we need smarter ways to remove background noise while preserving even the faintest traces of satellites.

Here we introduce a powerful new method: the use of denoising diffusion neural networks (DDNNs). These are a class of generative artificial intelligence models originally developed for producing highly realistic images. In this work they are repurposed to learn the subtle noise patterns present in optical sensors, atmospheric conditions, and astronomical backgrounds, and then to remove those patterns adaptively from real observational data.

A New Frontier for Background Removal

In space situational awareness (SSA), the ability to suppress noise directly improves the fidelity of satellite detection. Better background removal means smaller and fainter objects can be identified, even in high orbits or during less-than-ideal conditions. It also improves the accuracy of photometric calibration, which is critical for characterising the size, shape, and rotation of resident space objects (RSOs) by studying their reflected light.

Unlike earlier methods that rely on prior assumptions about the detector or the sky, DDNNs are trained directly on raw telescope images. The network learns the combined effects of star streaks, atmospheric variations, detector imperfections, and other non-linear distortions, all without the need for manual pre-processing. Once trained, the model can generate a “noise map” that captures these systematic effects and can be subtracted from new observations.
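As a rough illustration of the subtraction step, here is a minimal sketch in Python with NumPy. The function name `subtract_noise_map` and the toy arrays are assumptions made for this example; in practice the noise map would be generated by the trained DDNN rather than built by hand.

```python
import numpy as np

def subtract_noise_map(raw_frame, noise_map):
    """Subtract a model-generated background estimate from a raw frame."""
    raw_frame = np.asarray(raw_frame, dtype=np.float64)
    noise_map = np.asarray(noise_map, dtype=np.float64)
    if raw_frame.shape != noise_map.shape:
        raise ValueError("raw frame and noise map must have the same shape")
    return raw_frame - noise_map

# Toy data: a sky gradient plus row banding stands in for the systematics.
rows, cols = np.mgrid[0:32, 0:32]
systematics = 100.0 + 0.5 * cols + 3.0 * (rows % 2)
raw = systematics.copy()
raw[16, 16] += 25.0  # a single bright pixel standing in for a satellite

# Here the "noise map" is the known systematics; a trained model would supply it.
residual = subtract_noise_map(raw, systematics)
```

In this idealised case the residual is zero everywhere except at the injected pixel. With a real model the residual would also contain the random noise that the network cannot predict.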

Training on Real Observations

To develop the method, the team used observations from Spaceflux’s New Mexico sensor. Images of geostationary satellites were collected with exposure times between 0.1 and 2 seconds. Importantly, no standard calibration steps such as flat-fielding were applied. Instead, the raw data – including detector banding, background stars, and atmospheric distortions – were used directly, allowing the DDNN to learn the true noise behaviour of the instrument.

The training process involves gradually degrading these images into random noise and then teaching the network to reverse the process. By repeating this forward-and-backward cycle thousands of times, the model learns the difference between structured signals (like star streaks or detector noise) and the random white noise that cannot be modelled.
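The degradation step can be sketched with the standard forward process from Ho et al. (2020), which allows jumping directly to any noise level. This is a minimal illustration rather than the configuration used in the study: the linear schedule, the number of steps, and the random placeholder image are all assumptions, and the function names are hypothetical.

```python
import numpy as np

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances; the default range follows Ho et al. (2020)."""
    return np.linspace(beta_start, beta_end, num_steps)

def forward_diffuse(x0, t, alpha_bar, rng):
    """Jump straight to step t: x_t = sqrt(a_bar_t) * x0 + sqrt(1 - a_bar_t) * eps."""
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return x_t, eps

num_steps = 1000                       # assumed; the article does not give T
betas = linear_beta_schedule(num_steps)
alpha_bar = np.cumprod(1.0 - betas)    # fraction of signal variance retained

rng = np.random.default_rng(0)
x0 = rng.normal(size=(64, 64))         # placeholder for a normalised raw frame

# One training example: the network would see (x_t, t) and be asked to predict eps.
t = int(rng.integers(0, num_steps))
x_t, eps = forward_diffuse(x0, t, alpha_bar, rng)

# Early steps keep most of the image; the last step is almost pure noise.
x_early, _ = forward_diffuse(x0, 0, alpha_bar, rng)
x_late, _ = forward_diffuse(x0, num_steps - 1, alpha_bar, rng)
corr_early = np.corrcoef(x0.ravel(), x_early.ravel())[0, 1]
corr_late = np.corrcoef(x0.ravel(), x_late.ravel())[0, 1]
```

Comparing `corr_early` with `corr_late` shows how the correlation with the original frame falls away as the step index grows, which is the property the network exploits when it learns to undo the noise.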

Sharper Detections in Noisy Regimes

When applied to test images, the DDNN successfully separated instrument artefacts from true signals. In one case, an artificial faint satellite was injected into the raw data. After processing, the background star streaks and detector noise were removed while the satellite remained clearly visible. The signal-to-noise ratio improved by around 60%, a substantial boost in detection capability.
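An injection test of this kind can be mimicked on toy data. The sketch below is an assumption-laden illustration: the article does not say how the signal-to-noise ratio was defined, so a simple peak-over-robust-scatter estimate is used here, the synthetic source is a circular Gaussian, and a perfect banding model stands in for the DDNN output. The resulting numbers are unrelated to the 60% figure quoted above.

```python
import numpy as np

def gaussian_psf(shape, center, sigma, flux):
    """Circular 2-D Gaussian normalised so its pixels sum to `flux`."""
    rows, cols = np.indices(shape)
    r2 = (rows - center[0]) ** 2 + (cols - center[1]) ** 2
    psf = np.exp(-r2 / (2.0 * sigma ** 2))
    return flux * psf / psf.sum()

def peak_snr(image, center, half_width=3):
    """Peak pixel in a small box over the robust scatter of the rest of the frame."""
    r, c = center
    box = (slice(r - half_width, r + half_width + 1),
           slice(c - half_width, c + half_width + 1))
    mask = np.ones(image.shape, dtype=bool)
    mask[box] = False
    background = image[mask]
    median = np.median(background)
    # Median absolute deviation, scaled to match the std of Gaussian noise.
    sigma = 1.4826 * np.median(np.abs(background - median))
    return (image[box].max() - median) / sigma

rng = np.random.default_rng(1)
shape, center = (64, 64), (32, 32)

# Toy frame: white noise plus row banding as a stand-in for structured detector noise.
banding = 4.0 * np.sin(np.arange(shape[0]) / 2.0)[:, None] * np.ones(shape)
raw = rng.normal(0.0, 1.0, shape) + banding
raw += gaussian_psf(shape, center, sigma=1.5, flux=150.0)  # injected synthetic source

snr_raw = peak_snr(raw, center)
snr_clean = peak_snr(raw - banding, center)  # banding removed by a perfect stand-in model
```

Because the structured banding inflates the background scatter, removing it raises the measured ratio even though the injected source itself is unchanged.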

Analysis of the images also showed that large-scale structures, such as sky gradients or instrument patterns, were strongly suppressed, leaving behind cleaner residuals. This kind of performance is especially promising for ultra-low signal-to-noise observations, such as small objects in geostationary or cis-lunar orbits, where every photon counts.

Implications and Future Work

This proof-of-concept shows that diffusion models, once the preserve of cutting-edge computer vision research, can play a transformative role in SSA. By learning from the data itself, they offer a generalised solution that can adapt to different instruments, sites, and observing conditions. The approach can be integrated directly into existing image stacking pipelines, improving the reliability of satellite tracking across diverse regimes.

While the study focused on geostationary observations, the methodology can be extended to low Earth orbit and twilight conditions. Further refinements in network design and hyperparameter optimisation are expected to improve results even more. In time, techniques like this could become a standard tool in the SSA and Space Domain Awareness (SDA) toolkit, helping to ensure safer and more sustainable use of Earth’s orbits.

This work was published as an AMOS conference paper; see the link below for more details.

Further Reading / References

For background on diffusion models in computer vision, see Ho et al. (2020), “Denoising Diffusion Probabilistic Models” and Croitoru et al. (2022), “Diffusion Models in Vision: A Survey”.

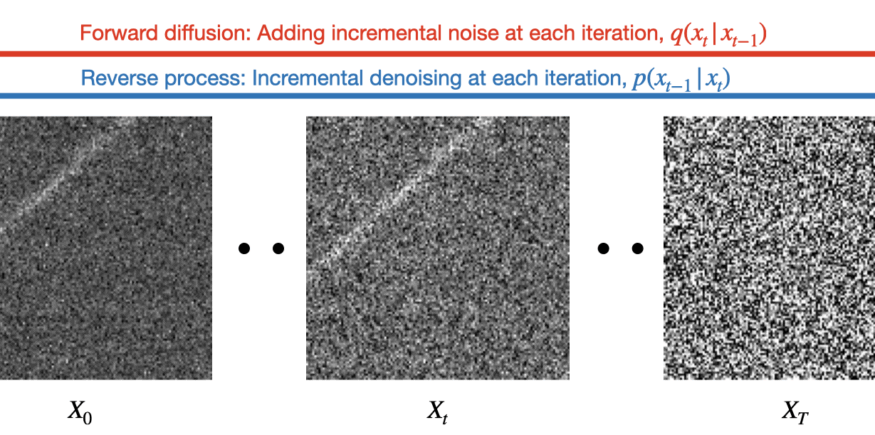

During training, the algorithm is trained using the forward process. During production, we use the inverse process to de-trend our data. During training, we incrementally add Gaussian noise to our observed raw data (X_0) for T steps. We then learn the mapping between X_t and X_{t+1} until convergence. During inference, we compute the incremental inverse steps going from a noise distribution X_T to the data distribution X_0. Note that X_0 in this figure is real, observed data. No data simulations were used in this paper.
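The incremental inverse steps described in this caption can be sketched with the standard ancestral sampling rule from Ho et al. (2020). This is an illustration under stated assumptions, not the paper's implementation: `dummy_eps_model` is a hypothetical stand-in for the trained network, the schedule is a short assumed one, and how the real model is conditioned on the raw input is not covered here.

```python
import numpy as np

def reverse_step(x_t, t, eps_pred, betas, alpha_bar, rng):
    """One ancestral sampling step x_t -> x_{t-1} (Ho et al. 2020, Algorithm 2)."""
    alpha_t = 1.0 - betas[t]
    mean = (x_t - betas[t] / np.sqrt(1.0 - alpha_bar[t]) * eps_pred) / np.sqrt(alpha_t)
    if t == 0:
        return mean                      # no noise is added on the final step
    return mean + np.sqrt(betas[t]) * rng.standard_normal(x_t.shape)

def sample(eps_model, shape, betas, rng):
    """Run the full reverse chain from pure noise down to step 0."""
    alpha_bar = np.cumprod(1.0 - betas)
    x = rng.standard_normal(shape)       # x_T drawn from the noise distribution
    for t in range(len(betas) - 1, -1, -1):
        x = reverse_step(x, t, eps_model(x, t), betas, alpha_bar, rng)
    return x

# Hypothetical stand-in for the trained network: it always predicts zero noise.
def dummy_eps_model(x, t):
    return np.zeros_like(x)

rng = np.random.default_rng(0)
betas = np.linspace(1e-4, 0.02, 50)      # short assumed schedule for the demo
out = sample(dummy_eps_model, (8, 8), betas, rng)
```

With a trained noise predictor in place of the dummy, each pass through `reverse_step` removes a little of the predicted noise, so the chain moves from the noise distribution towards the learned data distribution.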

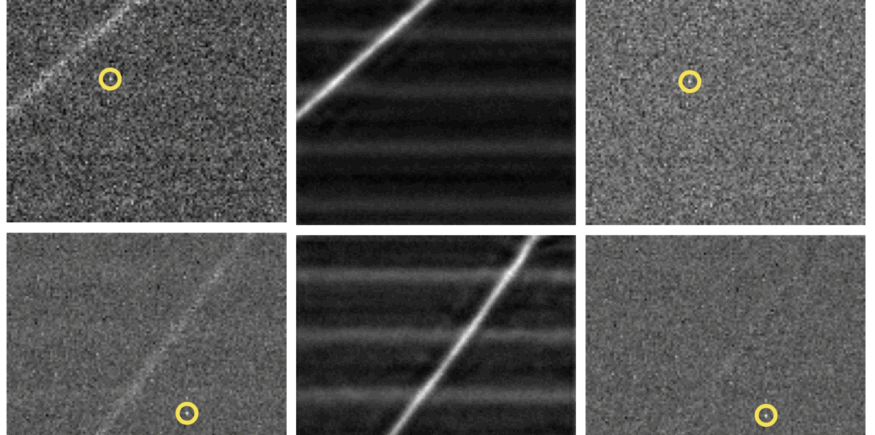

We note that this is real observed data with the RSO PSF injected. Middle: Derived DDNN map (p(x_0)) given raw observed input. The stellar streak as well as the CMOS banding noise can be seen clearly. Right: Residual image after subtracting the diffusion map from raw input. The remaining satellite is marked by a yellow circle.